Apple on Tuesday was awarded a patent covering the detection of human faces in digital video feeds by leveraging depth map information, technology that could be a building block of a face-based bio-recognition system rumored to debut with this year's iPhone.

Granted by the U.S. Patent and Trademark Office, Apple's U.S. Patent No. 9,589,177 for "Enhanced face detection using depth information" describes an offshoot of computer vision technology that applies specialized hardware and software systems to object recognition tasks, specifically those involving human faces.

The invention is part of a patent stash acquired by Apple through its 2013 purchase of Israeli motion capture specialist PrimeSense. A steady stream of PrimeSense IP has been trickling through the USPTO in the intervening years, including patents for 3D mapping, a 3D virtual keyboard and more.

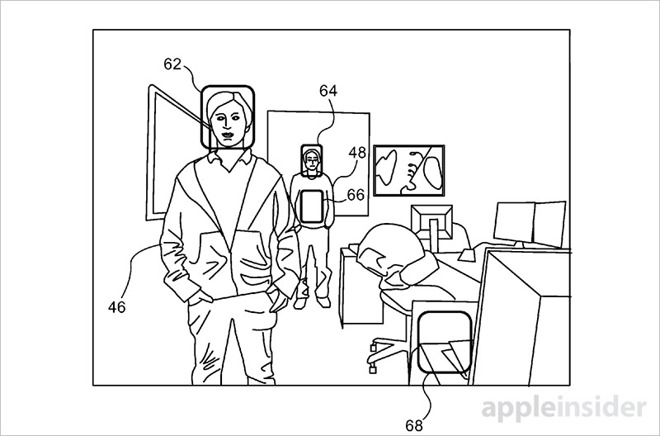

As noted in today's published document, face detection algorithms can be defined as software capable of scanning a digital image and extrapolating whether a portion, or "window," contains a face. Applied to a dynamic scene, or in some cases live video, the operation becomes increasingly complex as faces can appear at different locations and at different depths.

To adequately monitor a given scene, a conventional system simultaneously samples multiple candidate windows of different sizes. The need to process a plurality of windows not only requires more computing power, but might also result in an increased false detection rate.
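To give a rough sense of that cost, the following sketch counts how many square windows a brute-force multi-scale scan would test in a single frame. It is illustrative only: the function name, the frame dimensions, the window sizes and the stride are hypothetical and do not come from the patent.

```python
def count_candidate_windows(frame_w, frame_h, window_sizes, stride):
    """Count the square windows a brute-force multi-scale scan would test.

    All parameters are illustrative assumptions, not values from the patent.
    """
    total = 0
    for size in window_sizes:
        # A window larger than the frame cannot be placed anywhere.
        if size > frame_w or size > frame_h:
            continue
        cols = (frame_w - size) // stride + 1  # horizontal positions
        rows = (frame_h - size) // stride + 1  # vertical positions
        total += cols * rows
    return total


if __name__ == "__main__":
    # One window size versus several sizes on the same 640x480 frame.
    print(count_candidate_windows(640, 480, [64], 8))
    print(count_candidate_windows(640, 480, [64, 128, 256], 8))
```

Every additional window size adds another full pass over the frame, and each extra window tested is another chance for a false positive.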

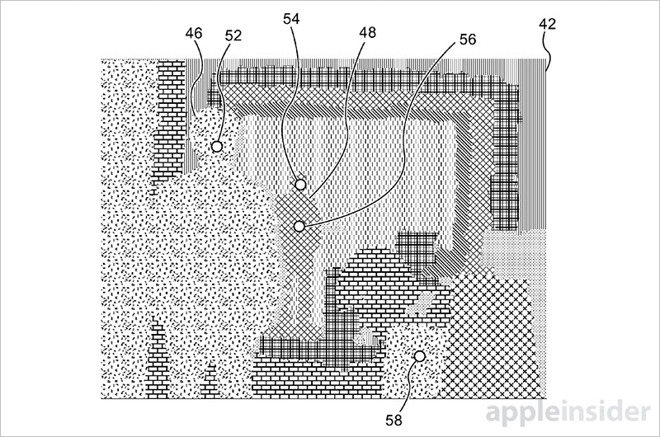

To cut down on processing overhead and potential false readings, Apple proposes applying depth information to existing face detection algorithms. As outlined in some embodiments, a depth map can be used to intelligently scale each window's size according to its depth coordinate, based on a "standard" face size.

In the patent language, a specialized infrared light emission system projects a pattern of optical radiation onto a scene. The patterned light is captured, processed and converted into a corresponding depth map.

The depth mapping system referenced in today's patent is based on infrared motion tracking technology developed by PrimeSense, similar versions of which are already in use with hardware like Microsoft's original Xbox Kinect sensor.

From the perspective of a device, a human face appears larger or smaller depending on its distance from the onboard camera's objective lens. For example, to accommodate a normal-sized face, a sample window two feet away from a device would need to be much larger than a window determined to be five feet away.

Since the depth of each window location is known, only one test sample needs to be processed, thereby cutting down on computing loads.
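The relationship between depth and window size can be sketched with a simple pinhole camera model, in which apparent size in pixels is inversely proportional to distance. This is an assumption made for illustration; the patent text summarized here does not spell out a formula. The function name, the 600-pixel focal length and the 0.2-meter "standard" face size are hypothetical values.

```python
def window_size_px(depth_m, focal_length_px, face_size_m=0.2):
    """Estimate the pixel size of a window that frames a 'standard' face.

    Assumes a pinhole camera model: size_px = focal_px * size_m / depth_m.
    The default face size of 0.2 m is a hypothetical placeholder.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_length_px * face_size_m / depth_m


if __name__ == "__main__":
    FEET_TO_M = 0.3048
    focal_px = 600.0  # hypothetical focal length expressed in pixels

    near = window_size_px(2 * FEET_TO_M, focal_px)  # face two feet away
    far = window_size_px(5 * FEET_TO_M, focal_px)   # face five feet away
    print(f"window at 2 ft: {near:.0f} px, window at 5 ft: {far:.0f} px")
```

Under this model a face two feet from the camera needs a window 2.5 times wider than one five feet away, so a detector that knows the depth at a given location can test a single window size there instead of several.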

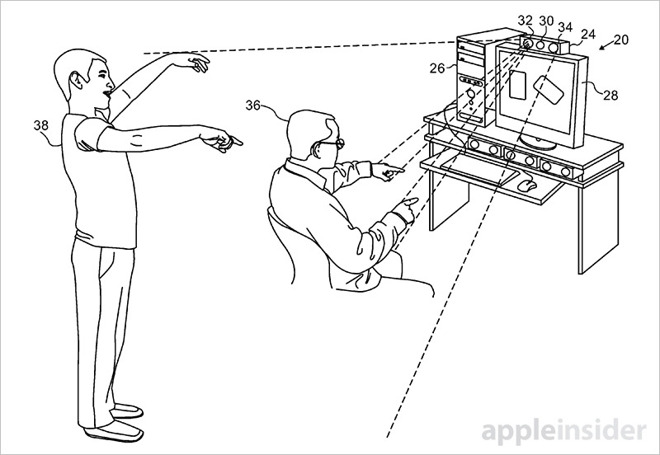

While today's patent grant describes a general solution for detecting human faces, it lacks contingencies for more granular identification. In other words, the detailed invention can determine whether or not a human face is in frame, but is unable to identify to whom that face belongs. However, face detection is an integral first step in bio-recognition technologies, often serving as the trigger for more complex image processing.

Whether Apple plans to integrate the invention into a future product is unknown, but a similar solution might show up in iPhone later this year. According to the latest predictions from KGI analyst Ming-Chi Kuo, Apple is rumored to include a "revolutionary" front-facing 3D camera system in this year's OLED iPhone model. By integrating infrared transmitter and receiver modules alongside the usual FaceTime camera, the setup is said to be capable of accomplishing a variety of tasks, from biometric authentication to gaming.

Apple's face detection patent was first filed in March 2015 and credits Yael Shor, Tomer Yanir and Yaniv Shaked as its inventors.
